Analyzing And Learning From Your A/B Experimentation Results

What to do when things go right…or wrong

If you ask some of the most successful optimizers what they believe is the most crucial aspect of CRO, most of them would say it’s analyzing and learning from concluded tests.

Experimentation isn’t just about testing rapidly, getting winners on your A/B test, and implementing the variation on your website. It’s about ensuring you’re improving your website and mobile app users’ experience – day in and day out.

Rather than being disappointed when a test you believed in fails, it’s important to dig into what went wrong. It could be an operational error or something else, but whatever it is, you need to figure it out, share it with your team, document it for the future, and apply what you learned to the next test.

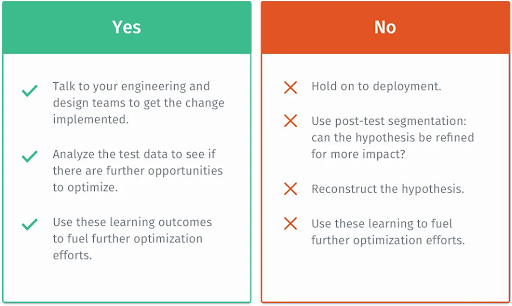

There are two probable outcomes when you run a test, as shown in the graphic above.

When Your Variation Has Won

Congratulations, your efforts have paid off. But what’s next? This is when you need to get answers to the following two questions:

- What is the cost of deploying the change(s) in terms of engineering hours, design hours, and so forth?

- Does the expected increase in revenue justify the actual cost involved?
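One rough way to put these two questions side by side is a simple payback calculation. The sketch below is illustrative only: the class name, the blended hourly rate, and every figure in the example are assumptions, not numbers from a real test, and it treats the measured lift as if it will hold after rollout.

```python
from dataclasses import dataclass


@dataclass
class RolloutEstimate:
    """Hypothetical inputs for weighing a winning variation against its cost."""
    engineering_hours: float
    design_hours: float
    hourly_rate: float               # assumed blended cost per hour
    baseline_monthly_revenue: float  # revenue of the tested flow before the change
    relative_lift: float             # e.g. 0.04 for a 4% lift seen in the test

    def deployment_cost(self) -> float:
        # Total cost of shipping the change, in the same currency as revenue.
        return (self.engineering_hours + self.design_hours) * self.hourly_rate

    def extra_monthly_revenue(self) -> float:
        # Expected additional revenue per month if the lift persists.
        return self.baseline_monthly_revenue * self.relative_lift

    def payback_months(self) -> float:
        # Months of extra revenue needed to recover the deployment cost.
        extra = self.extra_monthly_revenue()
        if extra <= 0:
            return float("inf")
        return self.deployment_cost() / extra


# Made-up example values.
estimate = RolloutEstimate(
    engineering_hours=80,
    design_hours=20,
    hourly_rate=100.0,
    baseline_monthly_revenue=50_000.0,
    relative_lift=0.04,
)
print(f"Cost to deploy: {estimate.deployment_cost():,.0f}")
print(f"Extra revenue per month: {estimate.extra_monthly_revenue():,.0f}")
print(f"Payback period (months): {estimate.payback_months():.1f}")
```

A short payback period suggests the change is worth deploying. A long one is a prompt to look for a cheaper way to ship the same idea.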

When Your Variation Has Lost

We can’t win all the time. And sometimes, a test that didn’t work can be just as revealing as one that did. In these cases, make sure that you:

- Analyze your research, check your hypothesis, and look for gaps in your reasoning.

- Study your test data. Segment it further to surface insights the overall numbers hide.

- Validate your research data with all the data-gathering tools used.

- Go through all the relevant case studies. They could help you come across new perspectives which you’d missed before.

- Reconstruct your hypothesis by accommodating new insights that you missed in your initial research.

- Go back and test again. (In other words, don’t give up.)

When There’s No Difference Between Test Variations

There could be cases when your outcome reveals there is ‘no significant difference’ between the two variations. At this point, you might be wondering if you should move on to something else.

Not so fast. Consider the following before you throw in the towel.

1) Your test hypothesis might have been right, but the test setup/implementation could have been poor.

- We all make mistakes. Even the most experienced optimizers run into issues like these all the time. But how do you solve this? By building processes that prioritize operational excellence over anything else.

2) Even if there was no difference overall, the treatment might have beaten the control in a segment or two.

- It could be that you got a lift in your target metric for some segments, such as returning visitors and mobile visitors, but a drop for new visitors and desktop users. Those segments can cancel each other out and make the result look like a case of “no difference.”

- To find out whether this is what happened, you need to dig deeper into your data. Then run the test again, separately, for both the segments where the variation won and the ones where it didn’t.
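The cancelling-out effect described above can be sketched with a few lines of Python. This is a minimal illustration, assuming a pooled two-proportion z-test as the significance check (your testing tool may use a different method). The segment names and counts are invented, and `two_proportion_z_test` is a helper written for this example.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) comparing variation B against control A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: (control conversions, control visitors,
#                   variation conversions, variation visitors)
segments = {
    "returning visitors": (500, 5000, 600, 5000),
    "new visitors": (600, 5000, 500, 5000),
}

# Overall result: sum each column across segments.
overall = tuple(sum(column) for column in zip(*segments.values()))

results = {"overall": two_proportion_z_test(*overall)}
for name, counts in segments.items():
    results[name] = two_proportion_z_test(*counts)

for name, (z, p) in results.items():
    print(f"{name:>20}: z = {z:+.2f}, p = {p:.4f}")
```

With these numbers the overall comparison shows no difference at all, while each segment on its own shows a clear movement in opposite directions. Keep in mind that slicing data into many segments after the fact raises the odds of a false positive, which is one more reason to rerun the test on the segments that stand out.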

Experimentation Is Key

The digital world is evolving at breakneck speed. The only real competitive advantage brands have over their peers is the experience they provide to their users and customers. The onus is on marketers to deliver an experience that is different, relevant, contextual and, most of all, enriching. Experimentation will help you get there.

The companies redefining brand experience are those that have embraced risk, innovation, and experimentation. It’s become the new playbook for growth. And it’s easier than you think. In our latest white paper, “The Case For Experimentation: The What, Why & How”, we’ll take you step-by-step through the entire process.

Missed our first or second blogs in this series? Read blog 1 here and blog 2 here.